Dedup Forwarder Introduction

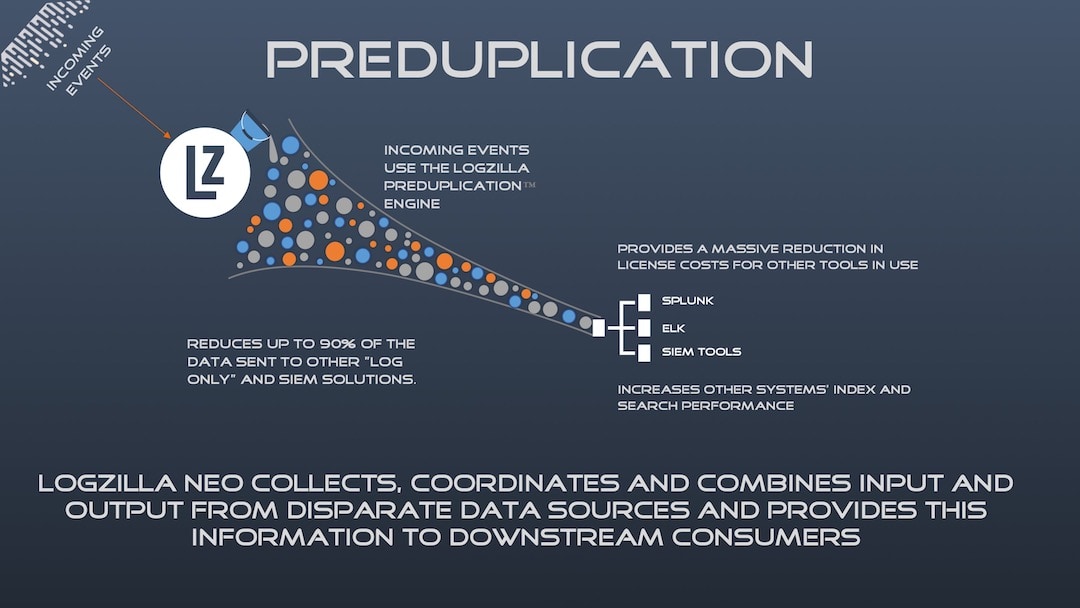

Deduplication and Forwarder Overview

LogZilla performs deduplication at ingest to collapse repeated events into a single forwarded event per window, with a count of occurrences. This reduces downstream CPU, storage, and licensing impact during event storms, while preserving the signal needed for operations.

Deduplication process

In large environments, devices may emit the same event repeatedly (for example, during a link flap or authentication failure). Deduplication at ingest ensures downstream receivers handle a small, meaningful set of events rather than an overwhelming flood.

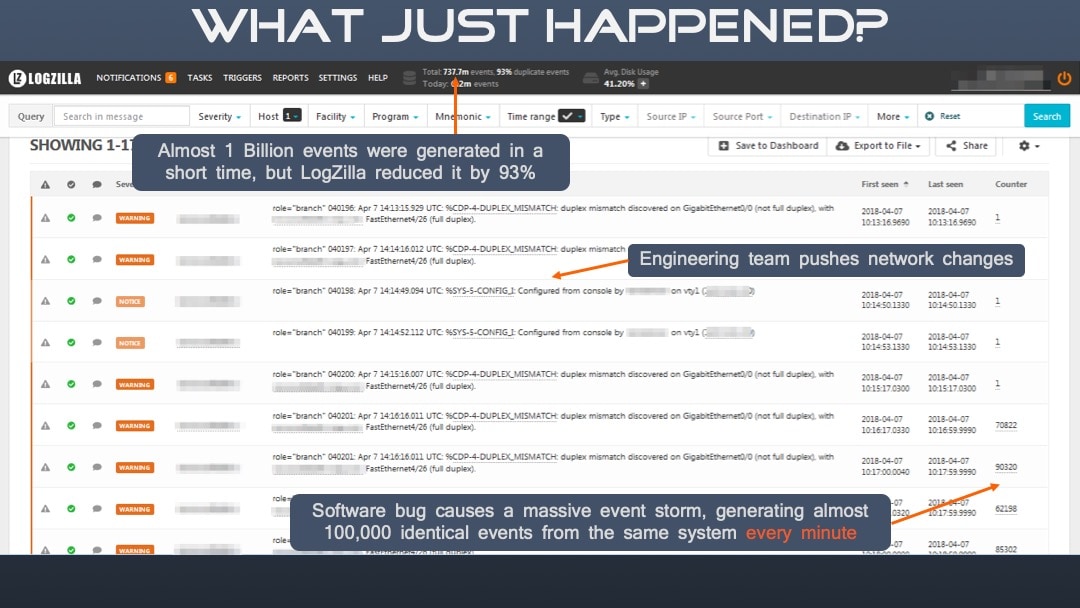

Event storm example

In the scenario above, hundreds of thousands of duplicate events could be reduced to just a handful, with a counter indicating how many times each unique event occurred.
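The collapse described above can be illustrated with a minimal sketch. This is not LogZilla's implementation: the `(timestamp, host, message)` tuple layout, the choice of `(host, message)` as the dedup key, and the names `Summary` and `deduplicate` are all assumptions made for illustration. The only value borrowed from this page is the 60-second window.

```python
from dataclasses import dataclass


@dataclass
class Summary:
    host: str
    message: str
    window_start: float  # timestamp of the first occurrence in the window
    count: int = 1       # number of occurrences collapsed into this summary


def deduplicate(events, window_size=60):
    """Collapse repeated (host, message) pairs into one summary per window.

    `events` is an iterable of (timestamp, host, message) tuples sorted by
    timestamp. The tuple layout and the dedup key are assumptions of this
    sketch, not LogZilla's actual matching logic.
    """
    open_windows = {}  # key -> Summary currently accumulating duplicates
    summaries = []     # summaries in the order their windows were opened
    for ts, host, message in events:
        key = (host, message)
        current = open_windows.get(key)
        if current is not None and ts - current.window_start < window_size:
            current.count += 1  # duplicate inside the open window
            continue
        # First occurrence, or the previous window expired: open a new one.
        summary = Summary(host, message, ts)
        open_windows[key] = summary
        summaries.append(summary)
    return summaries


# A simulated link flap: 500 identical events in under 50 seconds,
# plus one unrelated authentication failure.
storm = [(i * 0.1, "sw1", "Interface Gi0/1 changed state to down") for i in range(500)]
storm.append((50.0, "fw1", "Authentication failure for user admin"))
storm.sort(key=lambda e: e[0])

for s in deduplicate(storm):
    print(f"{s.host}: {s.message} (x{s.count})")
```

Here the 501 input events collapse to two summaries, one per unique event, each carrying its occurrence count. A repeat that arrives after the window has expired opens a new window and produces a new summary.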

Forwarding options

LogZilla can forward events as a log or a trap, regardless of how the event was received (syslog, HTTP receiver, traps, or app inserts). The Forwarder module applies deduplication first, then applies any forwarder rules, and finally delivers to the configured destination(s).
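The order of operations (deduplicate, then apply rules, then deliver) can be sketched as three small stages. Everything here is hypothetical and only illustrates the ordering: rules are modelled as simple predicate functions, destinations as callables, and the time window is left out for brevity. None of these names come from LogZilla.

```python
from collections import Counter


def dedup_stage(events):
    """Stage 1: collapse identical events into (event, count) pairs."""
    return list(Counter(events).items())


def rules_stage(counted, rules):
    """Stage 2: keep only events accepted by every rule (hypothetical predicates)."""
    return [(event, n) for event, n in counted if all(rule(event) for rule in rules)]


def deliver_stage(counted, destinations):
    """Stage 3: hand each surviving event to every configured destination."""
    for send in destinations:
        for event, n in counted:
            send(event, n)


def forward(events, rules, destinations):
    # The stages run in the order described above.
    deliver_stage(rules_stage(dedup_stage(events), rules), destinations)


received = ["link down", "link down", "debug noise", "link down"]
as_log, as_trap = [], []  # stand-ins for a log destination and a trap destination
forward(
    received,
    rules=[lambda event: "debug" not in event],
    destinations=[
        lambda event, n: as_log.append((event, n)),
        lambda event, n: as_trap.append((event, n)),
    ],
)
print(as_log)
print(as_trap)
```

Because deduplication runs first, the rules in this sketch are evaluated once per unique event rather than once per raw event, and every destination receives the same collapsed event with its count.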

Configuration locations and workflow

- /etc/logzilla/forwarder.yaml (or .json): Global forwarder file. It is created automatically on first use with sane defaults (window_size: 60, fast_forward_first: true). Most users should not modify this file directly.
- /etc/logzilla/forwarder.d/: Recommended location. Place individual forwarders here, one forwarder per file (YAML or JSON). This keeps configurations modular and easier to manage.

After adding or changing forwarders:

```bash
# Inspect the merged forwarder configuration (useful for syntax checks)
logzilla forwarder print

# Reload the forwarder to apply changes
logzilla forwarder reload
```

To enable the Forwarder module feature flag:

```bash
logzilla settings update FORWARDER_ENABLED=true
```

For full CLI usage, refer to:

- System commands: /help/command_line_tools/system_commands
- Data commands (forwarder): /help/command_line_tools/data_commands